Today we’re going to talk about validation. Most people have some concept where they validate an entity object before it is saved to the database. There are many ways to implement this, and I’ve finally found my favorite way of writing validation code. But what I think is really interesting is the thought process behind the many years of validation refactoring that got me to how I do validation today. A lot of this has to do with good coding practices that I’ve picked up over time and little tricks that allow me to write better code. This is a really good example of how I’ve learned to write better code, so I thought I’d walk you through it (and maybe you’ll like how I do validation too).

In the past, I would’ve created one method called something like Validate() and put all of the validation rules for that entity inside that method. It ended up looking something like this.

    public class Order
    {
        public Customer Customer { get; set; }
        public IList Products { get; set; }
        public string State { get; set; }
        public decimal Tax { get; set; }
        public decimal ShippingCharges { get; set; }
        public decimal Total { get; set; }

        public ValidationErrorsCollection Validate()
        {
            var errors = new ValidationErrorsCollection();

            if (Customer == null)
                errors.Add("Customer is required.");

            // Treat a missing list the same as an empty one.
            if (Products == null || Products.Count == 0)
                errors.Add("You must have at least one product.");

            if (State == "OH")
            {
                if (Tax == 0)
                    errors.Add("You must charge tax in Ohio.");
            }
            else
            {
                // Free shipping (a charge of 0) is only allowed in Ohio.
                if (ShippingCharges == 0)
                    errors.Add("You cannot have free shipping outside of Ohio.");
            }

            return errors;
        }
    }

The problem with this approach is that it’s a pain to read the Validate() method. If you have a large object, this method starts getting really cluttered really fast and you have all kinds of crazy if statements floating around that make things hard to figure out. This method may be called Validate(), but it’s not telling much about how the object is going to be validated.

So how can we make our validation classes more readable and descriptive? First, I like to do simple validation using attributes. I’m talking about whether a field is required, checking for null, checking for min/max values, etc. I know that there is a certain percentage of the population that despises attributes on entity objects. They feel like it clutters up their class. In my opinion, I like using attributes because it’s really easy, it reduces duplication, it’s less work, and I like having attributes that describe a property and give me more information about it than just its type. On my project, I’m using NHibernate.Validator to give me these attributes, and I’ve also defined several new validation attributes of my own (just open NHibernate.Validator.dll in Reflector and see how the out-of-the-box ones are written and you’ll be able to create your own attributes with no problems). Now my class looks more like this:

    public class Order
    {
        [Required("Customer")]
        public Customer Customer { get; set; }

        [AtLeastOneItemInList("You must have at least one product.")]
        public IList Products { get; set; }

        public string State { get; set; }
        public decimal Tax { get; set; }
        public decimal ShippingCharges { get; set; }
        public decimal Total { get; set; }

        public ValidationErrorsCollection Validate()
        {
            var errors = new ValidationErrorsCollection();

            if (State == "OH")
            {
                if (Tax == 0)
                    errors.Add("You must charge tax in Ohio.");
            }
            else
            {
                // Free shipping (a charge of 0) is only allowed in Ohio.
                if (ShippingCharges == 0)
                    errors.Add("You cannot have free shipping outside of Ohio.");
            }

            return errors;
        }
    }
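If you’ve never seen how an attribute like this can work under the hood, here is a minimal hand-rolled sketch. To be clear, this is not NHibernate.Validator’s API: `ValidationRuleAttribute`, `AttributeValidator`, `SampleOrder`, and the list-based `ValidationErrorsCollection` are names and shapes I’m assuming purely for illustration. It only shows the general idea of reading attributes off properties with reflection.

```csharp
using System;
using System.Collections.Generic;
using System.Reflection;

// Stand-in for the article's error collection (assumed to be a list of messages).
public class ValidationErrorsCollection : List<string> { }

// Hypothetical base class: each rule attribute knows how to check one property value.
[AttributeUsage(AttributeTargets.Property)]
public abstract class ValidationRuleAttribute : Attribute
{
    // Returns an error message, or null when the value is valid.
    public abstract string Check(object value);
}

public class RequiredAttribute : ValidationRuleAttribute
{
    private readonly string _displayName;

    public RequiredAttribute(string displayName)
    {
        _displayName = displayName;
    }

    public override string Check(object value)
    {
        return value == null ? _displayName + " is required." : null;
    }
}

public static class AttributeValidator
{
    // Runs every rule attribute found on the entity's public properties.
    public static ValidationErrorsCollection Validate(object entity)
    {
        var errors = new ValidationErrorsCollection();
        foreach (PropertyInfo property in entity.GetType().GetProperties())
        {
            if (property.GetIndexParameters().Length > 0)
                continue; // skip indexers, which need arguments to read

            object value = property.GetValue(entity, null);
            object[] rules = property.GetCustomAttributes(typeof(ValidationRuleAttribute), true);
            foreach (ValidationRuleAttribute rule in rules)
            {
                string error = rule.Check(value);
                if (error != null)
                    errors.Add(error);
            }
        }
        return errors;
    }
}

public class Customer { }

// Hypothetical entity used only to exercise the sketch.
public class SampleOrder
{
    [Required("Customer")]
    public Customer Customer { get; set; }
}
```

Calling `AttributeValidator.Validate(new SampleOrder())` yields a single "Customer is required." message, and the message disappears once `Customer` is set. A real validation library does far more than this (caching, message resources, nested objects), which is part of why I’d rather use one than write my own.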

When I put these attributes on properties, I don’t write unit tests for that validation. If I was really concerned about whether or not I put an attribute on a property, I could spend 2 seconds going and actually checking to see if that attribute was on the property instead of spending 2 minutes writing a test. It’s just so easy to use an attribute that it’s hard to screw it up. I haven’t been burned by this yet. So already I’ve eliminated some validation code that was cluttering up my validation methods and I eliminated some tests that I would’ve otherwise written.

But my Validate() method still looks messy, and it’s doing a bunch of different validations. The method name sure isn’t telling me anything about the type of custom validation that is being done.

Let’s write some tests and see where our tests might lead us.

    [TestFixture]
    public class When_validating_whether_tax_is_charged
    {
        [Test]
        public void Should_return_error_if_tax_is_0_and_state_is_Ohio()
        {
            var order = new Order { Tax = 0, State = "OH" };
            order.Validate().ShouldContain(Order.TaxValidationMessage);
        }

        [Test]
        public void Should_not_return_error_if_tax_is_greater_than_0_and_state_is_Ohio()
        {
            var order = new Order { Tax = 3, State = "OH" };
            order.Validate().ShouldNotContain(Order.TaxValidationMessage);
        }

        [Test]
        public void Should_not_return_error_if_tax_is_0_and_state_is_not_Ohio()
        {
            var order = new Order { Tax = 0, State = "MI" };
            order.Validate().ShouldNotContain(Order.TaxValidationMessage);
        }

        [Test]
        public void Should_not_return_error_if_tax_is_greater_than_0_and_state_is_not_Ohio()
        {
            var order = new Order { Tax = 3, State = "MI" };
            order.Validate().ShouldNotContain(Order.TaxValidationMessage);
        }
    }

    [TestFixture]
    public class When_validating_whether_shipping_is_charged
    {
        [Test]
        public void Should_return_error_if_shipping_is_0_and_state_is_not_Ohio()
        {
            var order = new Order { ShippingCharges = 0, State = "MI" };
            order.Validate().ShouldContain(Order.ShippingValidationMessage);
        }

        [Test]
        public void Should_not_return_error_if_shipping_is_0_and_state_is_Ohio()
        {
            var order = new Order { ShippingCharges = 0, State = "OH" };
            order.Validate().ShouldNotContain(Order.ShippingValidationMessage);
        }

        [Test]
        public void Should_not_return_error_if_shipping_is_greater_than_0_and_state_is_Ohio()
        {
            var order = new Order { ShippingCharges = 5, State = "OH" };
            order.Validate().ShouldNotContain(Order.ShippingValidationMessage);
        }

        [Test]
        public void Should_not_return_error_if_shipping_is_greater_than_0_and_state_is_not_Ohio()
        {
            var order = new Order { ShippingCharges = 5, State = "MI" };
            order.Validate().ShouldNotContain(Order.ShippingValidationMessage);
        }
    }

These tests are testing all of the positive and negative possibilities of each validation rule. Notice that I’m not checking just that the object was valid, I’m testing for the presence of a specific error message. If you just try to test whether the object is valid or not, how do you really know if your code is working? If you test that the object should not be valid in a certain scenario and it comes back with some validation error, how would you know that it returned an error for the specific rule that you were testing unless you check for that validation message?
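The `ShouldContain` and `ShouldNotContain` calls in those tests are extension methods on the error collection. Here is a rough sketch of what such helpers could look like. I’m assuming `ValidationErrorsCollection` behaves like a list of message strings, and I’m throwing a plain exception so the sketch doesn’t depend on any test framework; in a real test project you would more likely delegate to your framework’s own assertions.

```csharp
using System;
using System.Collections.Generic;

// Stand-in for the article's error collection (assumed to be a list of messages).
public class ValidationErrorsCollection : List<string> { }

// Hypothetical assertion helpers that check for one specific validation message.
public static class ValidationErrorsAssertions
{
    public static void ShouldContain(this ValidationErrorsCollection errors, string expectedMessage)
    {
        if (!errors.Contains(expectedMessage))
            throw new Exception(
                "Expected error \"" + expectedMessage + "\" but found: " + Describe(errors));
    }

    public static void ShouldNotContain(this ValidationErrorsCollection errors, string unexpectedMessage)
    {
        if (errors.Contains(unexpectedMessage))
            throw new Exception(
                "Did not expect error \"" + unexpectedMessage + "\" but found: " + Describe(errors));
    }

    // Lists the actual messages so a failing test shows what came back.
    private static string Describe(ValidationErrorsCollection errors)
    {
        return errors.Count == 0 ? "(no errors)" : string.Join("; ", errors.ToArray());
    }
}
```

Because each helper looks for one exact message, a test only passes or fails on the rule it names, no matter what other rules happen to fire on the same object.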

I could just leave the validation code in my implementation class as is. But I’m still not happy with the Validate() method because it has a bunch of different rules all thrown in one place, with the potential for even more to get added. If I just refactor the code in the method into smaller methods, it’ll read better. So now I have this:

    public class Order
    {
        public const string ShippingValidationMessage = "You cannot have free shipping outside of Ohio.";
        public const string TaxValidationMessage = "You must charge tax in Ohio.";

        public Customer Customer { get; set; }
        public IList Products { get; set; }
        public string State { get; set; }
        public decimal Tax { get; set; }
        public decimal ShippingCharges { get; set; }
        public decimal Total { get; set; }

        public ValidationErrorsCollection Validate()
        {
            var errors = new ValidationErrorsCollection();

            ValidateThatTaxIsChargedInOhio(errors);
            ValidateThatShippingIsChargedOnOrdersSentOutsideOfOhio(errors);

            return errors;
        }

        private void ValidateThatShippingIsChargedOnOrdersSentOutsideOfOhio(
            ValidationErrorsCollection errors)
        {
            if (State != "OH" && ShippingCharges == 0)
                errors.Add(ShippingValidationMessage);
        }

        private void ValidateThatTaxIsChargedInOhio(ValidationErrorsCollection errors)
        {
            if (State == "OH" && Tax == 0)
                errors.Add(TaxValidationMessage);
        }
    }

That’s better. Now when you read my Validate() method, you have more details about what validation rules we are testing for. This is a more natural way of writing the code when you write your tests first because it just makes sense to create one method for each test class.

Then your boss comes to you with a new rule — an Ohio customer only gets free shipping on their first order. This is a little bit trickier to test because now I’m dealing with data outside of the object that is being validated. In order to test this, I am going to have to call out to the database in order to see if this customer has an order with free shipping. How this is done I don’t really care about in this example, I just know that I’m going to have some class that determines whether a customer has an existing order with free shipping.

One of the cardinal rules of writing unit tests is that I need to stub out external dependencies (like a database), and in order to do that, I need to use dependency injection and take in those dependencies as interface parameters in my constructor. But another rule of DI is that I can’t take dependencies into entity objects. This means that I’m going to have to split the validation code out from my entity object. I’ll move them out into a class called OrderValidator, and it’ll look like this:

    public class OrderValidator : IValidator<Order>
    {
        private readonly IGetOrdersForCustomerService _getOrdersForCustomerService;

        public const string ShippingValidationMessage = "You cannot have free shipping outside of Ohio.";
        public const string TaxValidationMessage = "You must charge tax in Ohio.";
        public const string CustomersDoNotHaveMoreThanOneOrderWithFreeShippingValidationMessage =
            "A customer cannot have more than one order with free shipping.";

        public OrderValidator(IGetOrdersForCustomerService getOrdersForCustomerService)
        {
            _getOrdersForCustomerService = getOrdersForCustomerService;
        }

        public ValidationErrorsCollection Validate(Order order)
        {
            var errors = new ValidationErrorsCollection();

            ValidateThatTaxIsChargedInOhio(order, errors);
            ValidateThatShippingIsChargedOnOrdersSentOutsideOfOhio(order, errors);
            ValidateThatCustomersDoNotHaveMoreThanOneOrderWithFreeShipping(order, errors);

            return errors;
        }

        private void ValidateThatCustomersDoNotHaveMoreThanOneOrderWithFreeShipping(
            Order order, ValidationErrorsCollection errors)
        {
            var ordersForCustomer = _getOrdersForCustomerService.GetOrdersForCustomer(order.Customer);

            // Swap any saved copy of this order for the in-memory version being validated.
            if (!order.IsNew)
                ordersForCustomer = ordersForCustomer.Where(o => o.Id != order.Id).ToList();

            ordersForCustomer.Add(order);

            if (ordersForCustomer.Count(o => o.ShippingCharges == 0) > 1)
                errors.Add(CustomersDoNotHaveMoreThanOneOrderWithFreeShippingValidationMessage);
        }

        private void ValidateThatShippingIsChargedOnOrdersSentOutsideOfOhio(
            Order order, ValidationErrorsCollection errors)
        {
            if (order.State != "OH" && order.ShippingCharges == 0)
                errors.Add(ShippingValidationMessage);
        }

        private void ValidateThatTaxIsChargedInOhio(Order order, ValidationErrorsCollection errors)
        {
            if (order.State == "OH" && order.Tax == 0)
                errors.Add(TaxValidationMessage);
        }
    }

Notice that the OrderValidator class implements IValidator<Order>. The IValidator<T> interface is pretty simple and looks like this:

    public interface IValidator<T>
    {
        ValidationErrorsCollection Validate(T obj);
    }
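With the database lookup hidden behind an interface, the free shipping rule can be tested with a stub and no database at all. Below is a self-contained sketch of that idea. Everything in it is a simplified stand-in I’m assuming for illustration: the shapes of `Order` and `Customer`, the `IsNew` check based on `Id == 0`, the return type of `GetOrdersForCustomer`, the hand-rolled `StubGetOrdersForCustomerService`, and `FreeShippingValidator`, which is a cut-down validator holding only this one rule. A mocking framework could produce the stub instead of writing it by hand.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Simplified stand-ins for the article's types (assumed shapes).
public class ValidationErrorsCollection : List<string> { }

public class Customer { public int Id { get; set; } }

public class Order
{
    public int Id { get; set; }
    public bool IsNew { get { return Id == 0; } } // assumption: unsaved orders have Id 0
    public Customer Customer { get; set; }
    public decimal ShippingCharges { get; set; }
}

public interface IGetOrdersForCustomerService
{
    IList<Order> GetOrdersForCustomer(Customer customer);
}

// Hand-rolled stub: returns canned orders instead of querying a database.
public class StubGetOrdersForCustomerService : IGetOrdersForCustomerService
{
    private readonly List<Order> _orders;

    public StubGetOrdersForCustomerService(params Order[] orders)
    {
        _orders = orders.ToList();
    }

    public IList<Order> GetOrdersForCustomer(Customer customer)
    {
        return _orders.ToList(); // hand back a copy so callers can modify it freely
    }
}

// Cut-down validator containing only the free shipping rule.
public class FreeShippingValidator
{
    public const string Message = "A customer cannot have more than one order with free shipping.";

    private readonly IGetOrdersForCustomerService _service;

    public FreeShippingValidator(IGetOrdersForCustomerService service)
    {
        _service = service;
    }

    public ValidationErrorsCollection Validate(Order order)
    {
        var errors = new ValidationErrorsCollection();

        // Drop any saved copy of this order, then add the version being validated.
        var orders = _service.GetOrdersForCustomer(order.Customer)
            .Where(o => order.IsNew || o.Id != order.Id)
            .ToList();
        orders.Add(order);

        if (orders.Count(o => o.ShippingCharges == 0) > 1)
            errors.Add(Message);

        return errors;
    }
}
```

In a test, I would build the stub with one existing free shipping order, validate a second new order with `ShippingCharges = 0`, and check that the result contains `FreeShippingValidator.Message`. Validating the existing order itself should come back clean, because its saved copy is filtered out before counting.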

Now that the validation class has been moved outside of the entity object, I need to know which IValidator<T> classes I need to run when I want to validate an object of a certain type. No worries, I can just create a class that will register validator objects by type. When the application starts up, I’ll tell my registration class to go search the assemblies for classes that implement IValidator<T>. Then when it’s time to validate, I can ask this registration class for all IValidator<T> types that it found for the type of entity that I need to validate and have it do the validation.
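Here is a rough sketch of what such a registration class might look like. `ValidatorRegistry`, `Scan`, and the sample `TaxIsChargedInOhioValidator` are hypothetical names, `ValidationErrorsCollection` is assumed to be a list of messages, and the sketch creates validators with `Activator.CreateInstance`, which only works for validators with a parameterless constructor. A validator that takes dependencies (like OrderValidator) would need to be resolved from your IoC container instead.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Reflection;

public class ValidationErrorsCollection : List<string> { }

public interface IValidator<T>
{
    ValidationErrorsCollection Validate(T obj);
}

// Hypothetical registry: maps an entity type to the validator types found for it.
public class ValidatorRegistry
{
    private readonly Dictionary<Type, List<Type>> _validatorTypesByEntity =
        new Dictionary<Type, List<Type>>();

    // Call once at startup for each assembly that may contain validators.
    public void Scan(Assembly assembly)
    {
        var candidates = assembly.GetTypes()
            .Where(t => t.IsClass && !t.IsAbstract && !t.ContainsGenericParameters);

        foreach (var type in candidates)
        {
            foreach (var validatorInterface in type.GetInterfaces().Where(IsValidatorInterface))
            {
                var entityType = validatorInterface.GetGenericArguments()[0];
                List<Type> validatorTypes;
                if (!_validatorTypesByEntity.TryGetValue(entityType, out validatorTypes))
                {
                    validatorTypes = new List<Type>();
                    _validatorTypesByEntity[entityType] = validatorTypes;
                }
                validatorTypes.Add(type);
            }
        }
    }

    // Runs every validator registered for exactly type T and merges the errors.
    public ValidationErrorsCollection Validate<T>(T entity)
    {
        var errors = new ValidationErrorsCollection();
        List<Type> validatorTypes;
        if (!_validatorTypesByEntity.TryGetValue(typeof(T), out validatorTypes))
            return errors;

        foreach (var validatorType in validatorTypes)
        {
            // Assumes a parameterless constructor (see the note above).
            var validator = (IValidator<T>)Activator.CreateInstance(validatorType);
            errors.AddRange(validator.Validate(entity));
        }
        return errors;
    }

    private static bool IsValidatorInterface(Type type)
    {
        return type.IsGenericType && type.GetGenericTypeDefinition() == typeof(IValidator<>);
    }
}

// Sample entity and validator, used only to exercise the sketch.
public class Order
{
    public string State { get; set; }
    public decimal Tax { get; set; }
}

public class TaxIsChargedInOhioValidator : IValidator<Order>
{
    public const string Message = "You must charge tax in Ohio.";

    public ValidationErrorsCollection Validate(Order order)
    {
        var errors = new ValidationErrorsCollection();
        if (order.State == "OH" && order.Tax == 0)
            errors.Add(Message);
        return errors;
    }
}
```

At startup you would call something like `registry.Scan(typeof(Order).Assembly)`, and from then on `registry.Validate(order)` runs whatever validators were discovered for `Order`, so adding a new validator class requires no extra configuration.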

I think we could take this one step further. Currently our OrderValidator class is doing three different validations. You could argue that this violates the Single Responsibility Principle because this class is doing three things. But you might also be able to argue that it doesn’t violate the Single Responsibility Principle because OrderValidator is only doing one type of thing. Does it really matter?

What if you got a new validation rule that says that the State property on the Order can only contain one of the lower 48 U.S. states (any state other than Alaska or Hawaii)? We also want to add this rule to a bunch of other entity objects that should only use the lower 48 states for their State property. Ideally, I would like to write this validation rule once and use it for all of those objects.

In order to do this, I’m going to create an interface first:

    public interface IHasLower48State
    {
        string State { get; }
    }

I’ll put this interface on the Order class and all of the other classes that have this rule. Now I’ll write my validation code (after I write my tests, of course!). The only problem is that it doesn’t really fit inside OrderValidator anymore because I’m not necessarily validating an Order, I’m validating an IHasLower48State, which could be an Order, but it also could be something else.

What I really need now is a class for this one validation rule. I’m going to give it an uber-descriptive name.

    public class Validate_that_state_is_one_of_the_lower_48_states : IValidator<IHasLower48State>
    {
        public const string Message = "State must be one of the lower 48 states.";

        public ValidationErrorsCollection Validate(IHasLower48State obj)
        {
            var errors = new ValidationErrorsCollection();

            if (obj.State == "AK" || obj.State == "HI")
                errors.Add(Message);

            return errors;
        }
    }

Some of you are freaking out because I put underscores in the class name. I put underscores in test class names, and it just seemed natural to use a very descriptive English class name that describes exactly what validation is being performed here. If you don’t like the underscores, then call it something descriptive without using underscores.

Now I change my registration class so that when you ask for all of the validation classes for an entity, it also checks for validation classes for interfaces that the entity implements.
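One way to sketch that lookup is to let `Type.IsAssignableFrom` do the matching, since it returns true when the entity is the validator’s target type, derives from it, or implements it. The names below (`InterfaceAwareValidatorRegistry`, `Register`, `Lower48StateValidator`, `Warehouse`) are hypothetical, `ValidationErrorsCollection` is assumed to be a list of messages, and validators are registered by hand here to keep the sketch short instead of being discovered by scanning assemblies.

```csharp
using System;
using System.Collections.Generic;

public class ValidationErrorsCollection : List<string> { }

public interface IValidator<T>
{
    ValidationErrorsCollection Validate(T obj);
}

public interface IHasLower48State
{
    string State { get; }
}

// Hypothetical registry that also matches validators written against interfaces.
public class InterfaceAwareValidatorRegistry
{
    // Each entry pairs the type a validator targets with a function that runs it.
    private readonly List<KeyValuePair<Type, Func<object, ValidationErrorsCollection>>> _validators =
        new List<KeyValuePair<Type, Func<object, ValidationErrorsCollection>>>();

    public void Register<T>(IValidator<T> validator)
    {
        _validators.Add(new KeyValuePair<Type, Func<object, ValidationErrorsCollection>>(
            typeof(T), entity => validator.Validate((T)entity)));
    }

    public ValidationErrorsCollection Validate(object entity)
    {
        var errors = new ValidationErrorsCollection();
        var entityType = entity.GetType();

        foreach (var entry in _validators)
        {
            // True when the entity is the target type, derives from it, or implements it.
            if (entry.Key.IsAssignableFrom(entityType))
                errors.AddRange(entry.Value(entity));
        }
        return errors;
    }
}

// Same rule as the article's lower 48 validator, with a shorter name for the sketch.
public class Lower48StateValidator : IValidator<IHasLower48State>
{
    public const string Message = "State must be one of the lower 48 states.";

    public ValidationErrorsCollection Validate(IHasLower48State obj)
    {
        var errors = new ValidationErrorsCollection();
        if (obj.State == "AK" || obj.State == "HI")
            errors.Add(Message);
        return errors;
    }
}

// Two unrelated sample entities that share the rule through the interface.
public class Order : IHasLower48State
{
    public string State { get; set; }
}

public class Warehouse : IHasLower48State
{
    public string State { get; set; }
}
```

After a single `registry.Register(new Lower48StateValidator())`, both `registry.Validate(new Order { State = "AK" })` and `registry.Validate(new Warehouse { State = "HI" })` return the lower 48 message, even though no validator was ever registered for `Order` or `Warehouse` directly.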

What’s great about this is that if an entity object implements IHasLower48State, it will now pick up this validation rule for free. My registration class has auto-wired it for me, so I don’t have to configure anything. I get functionality for free with no extra work! I’m creating cross-cutting validation rules where I’m validating types of entities.

Conclusion

If you made it to this point, you’re a dedicated reader (or you just skipped to the end). I wrote all of this not only to show how I do validation, but also to show you the thought process I go through and the hows and whys behind how I refactor things and find better ways to write code.

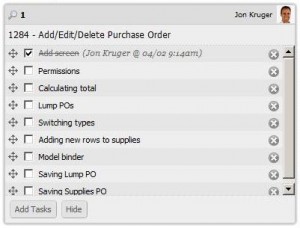

You can use an online tool, a text file, pen and paper, or whatever else you like to keep track of tasks. I use

You can use an online tool, a text file, pen and paper, or whatever else you like to keep track of tasks. I use

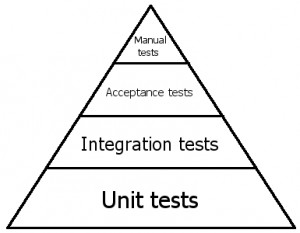

At the bottom of the triangle we have unit tests. These tests are testing code, individual methods in classes, really small pieces of functionality. We mock out dependencies in these tests so that we can test individual methods in isolation. These tests are written using testing frameworks like NUnit and use mocking frameworks like Rhino Mocks. Writing these kinds of tests will help us prove that our code is working and it will help us design our code. They will ensure that we only write enough code to make our tests pass. Unit tests are the foundation of a maintainable codebase.

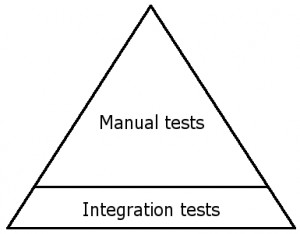

At the bottom of the triangle we have unit tests. These tests are testing code, individual methods in classes, really small pieces of functionality. We mock out dependencies in these tests so that we can test individual methods in isolation. These tests are written using testing frameworks like NUnit and use mocking frameworks like Rhino Mocks. Writing these kinds of tests will help us prove that our code is working and it will help us design our code. They will ensure that we only write enough code to make our tests pass. Unit tests are the foundation of a maintainable codebase. I find that the testing triangle on most projects tends to look more like this triangle. There are some automated integration tests, but these tests don’t use mocking frameworks to isolate dependencies, so they are slow and brittle, which makes them less valuable. An enormous amount of manpower is spent on manual testing.

I find that the testing triangle on most projects tends to look more like this triangle. There are some automated integration tests, but these tests don’t use mocking frameworks to isolate dependencies, so they are slow and brittle, which makes them less valuable. An enormous amount of manpower is spent on manual testing.