As I was writing my post on aspiring to be a Software Craftsman, I was thinking about why we have such a low standard of quality in our industry. Clearly there is a problem when there are so many software projects out there that are rewrites of previous projects, and businesses are hamstrung by software that is too difficult to change.

I’m very much an optimist most of the time, honest! But I can’t help but notice all kinds of things that don’t sit well with me.

Poor university training

I had 5 classes in my 4 years of college that were relevant to software development. I graduated in 2002. They started us out on C++, which was a fair choice in 1998. I was not able to take a database course until my senior year. During my last semester they finally introduced an intro to Java course which I was able to take. No courses on .NET, no courses in PHP, no courses in software design, no courses on techniques that could’ve made me successful (like unit testing).

The software industry is constantly changing, and many university programs are not keeping up. When you graduate you know just enough to be dangerous but not enough to really know what you’re doing. Four years is a long time, and you should be able to learn a lot during that time.

Post-college training is not encouraged

If you want to learn about software development, there is always a user group you can go to, a conference you can attend, thousands of blogs you can read, podcasts to listen to, and on and on. There is a wealth of information out there, but the average developer is not taking advantage of it. Some of these people don’t care to learn. But I think that most people care about doing a good job. The problem is that their employer does not encourage it. If someone doesn’t want to spend their time outside of work reading blogs and attending conferences, that’s their choice. There is nothing wrong with leaving your work at work and spending time with your family or doing other things. But most employers don’t offer enough training during work hours, whether that is organizing lunch and learns, paying for their devs to go to CodeMash, bringing in guest speakers, etc. It costs a lot of money to build software. Shouldn’t employers be willing to spend a little more money to train their employees so that they can build better quality software?

People don’t know what they don’t know

There are a lot of developers out there who don’t know software design techniques and concepts like unit testing, what SOLID stands for, why you should use an IoC container, and why you should design with interfaces. I would guess that a majority of these developers would love to know this stuff, but they don’t know that they don’t know it. A year ago I had never used Rhino Mocks, and I didn’t know what SOLID stood for or what an IoC container was. I thought I was a good developer, and I was in charge of a fairly large project, but I didn’t know that I didn’t know these things. Now that I’ve learned all that stuff I am a much better developer than I was a year ago. But now I’m wondering what other important stuff I don’t know.

My point is that I’m sure that it would really help developers if there was some way that they could go through some kind of training that would teach advanced software development topics like the ones that I mentioned. I see a lot of junior developers take Microsoft certification tests. It is unlikely that Microsoft certification tests will teach you any of the concepts that I mentioned. You will, on the other hand, memorize a lot of stuff that you can find on Google. I’m not saying that studying for and taking those tests won’t teach you anything, but they’re not going to teach you how to write quality software.

Low business expectations

Many IT managers and executives have very low expectations for their software. Having to rewrite software is widely accepted as normal these days. Wouldn’t it be so much better and cheaper to change your existing software when you need it to do something different?

The problem is that so much of our software is too difficult to change due to bad design, lack of testing, and many other factors. Many businesses do very little to address the root problem: their developers don’t have adequate training or don’t have the ability to write good software that can last.

Many IT managers believe that disposable software is the best they can do. If they’re going to get low quality anyway, they figure they might as well pay less for it, so they turn to offshoring. Either way, they’re still getting the same low quality software and they’re wasting money rewriting it every several years.

Short term thinking

Many businesses get sucked into short term thinking. I’m sure there are many ways that this can happen, but here’s one way I can see it playing out.

- Executives want to show results because they’re worried about their stock price. They demand results this year.

- IT Manager is under pressure to deliver something this year, possibly with a limited budget.

- Developers are under pressure to deliver something and have really tight deadlines.

- Developers throw something together and finish the project. IT Managers show it to executives who like what they see. Executives show it at a trade show and shareholders are happy.

- Two years later the software no longer meets the needs of the business because the app is unmaintainable, so the business starts budgeting money to bring in consultants to rewrite it.

It would’ve made much more sense to invest more time and money into the project the first time so that it wouldn’t have to be rewritten two years later. But because the business needs to show progress to the shareholders they won’t do it this way. This is a tough situation, and I can understand the pressure behind some of these decisions, but the end result still doesn’t make sense to me.

Little or no accountability

If you’re a developer and you write bad code, you probably aren’t going to lose your job unless there is a recession, you do a really really bad job, or just quit showing up for work. If a civil engineer builds a bridge that fails after 5 years, I guarantee you he won’t be working on bridges anymore, at least not at the same company. There is very little pressure in the software industry to write quality software.

In closing…

I don’t know what the solution to this problem is because it is a problem on so many fronts. But I do have control over what’s around me. I can aspire to become a software craftsman — someone who designs and writes quality software. I can hold myself accountable and make sure that I have high standards. And I can try and teach other people to do the same.

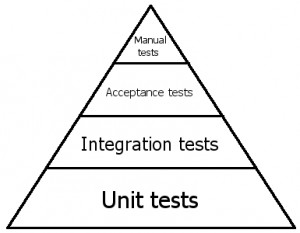

At the bottom of the triangle we have unit tests. These tests are testing code, individual methods in classes, really small pieces of functionality. We mock out dependencies in these tests so that we can test individual methods in isolation. These tests are written using testing frameworks like NUnit and use mocking frameworks like Rhino Mocks. Writing these kinds of tests will help us prove that our code is working and it will help us design our code. They will ensure that we only write enough code to make our tests pass. Unit tests are the foundation of a maintainable codebase.
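To make that concrete, here is a minimal sketch of what a unit test with a mocked-out dependency can look like. I’m using Python and its standard `unittest.mock` module here as a stand-in for NUnit and Rhino Mocks, and `OrderService`, its `gateway`, and the `charge` method are hypothetical names I made up for illustration, not code from a real project.

```python
from unittest.mock import Mock


class OrderService:
    """Hypothetical class under test. It depends on a payment gateway
    that is passed in, so a test can substitute a mock for it."""

    def __init__(self, gateway):
        self.gateway = gateway

    def place_order(self, amount):
        if amount <= 0:
            raise ValueError("amount must be positive")
        # The only outside dependency: ask the gateway to charge the amount.
        return "confirmed" if self.gateway.charge(amount) else "declined"


def test_place_order_confirms_when_charge_succeeds():
    gateway = Mock()                      # stands in for the real gateway
    gateway.charge.return_value = True    # stub the dependency's answer
    service = OrderService(gateway)

    assert service.place_order(25) == "confirmed"
    gateway.charge.assert_called_once_with(25)


def test_place_order_declines_when_charge_fails():
    gateway = Mock()
    gateway.charge.return_value = False
    service = OrderService(gateway)

    assert service.place_order(25) == "declined"


def test_place_order_rejects_bad_amount_without_charging():
    gateway = Mock()
    service = OrderService(gateway)

    try:
        service.place_order(0)
    except ValueError:
        pass
    else:
        raise AssertionError("expected ValueError")
    # The method bailed out before touching the dependency.
    gateway.charge.assert_not_called()
```

Because the gateway is a mock, each test exercises only `place_order` and never touches a real payment system, which is what keeps tests at this level fast and isolated.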

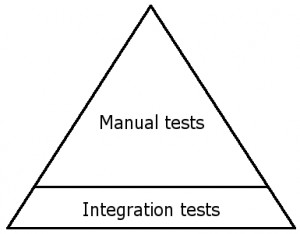

I find that the testing triangle on most projects tends to look more like this triangle. There are some automated integration tests, but these tests don’t use mocking frameworks to isolate dependencies, so they are slow and brittle, which makes them less valuable. An enormous amount of manpower is spent on manual testing.