LINQ to SQL is a great tool, but when you’re using it in an n-tier scenario, there are several problems that you have to solve. A simple example is a web service that allows you to retrieve a record of data and save a record of data when the object that you are loading/saving had child lists of objects. Here are some of the problems that you have to solve:

1) How can you create these web services without having to create a separate set of entity objects (that is, we want to use the entity objects that the LINQ to SQL designer generates for us)?

2) What do we have to do to get LINQ to SQL to work with entities passed in through web services?

3) How do you create these loading/saving web services while keeping the amount of data passed across the wire to a minimum?

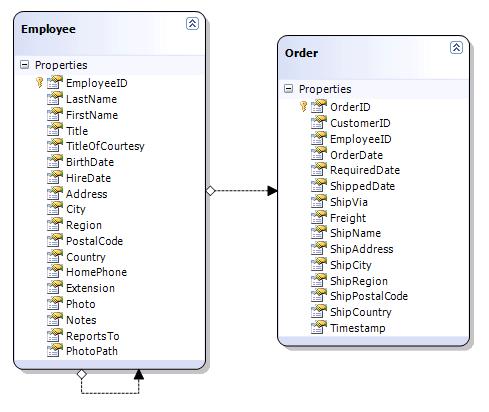

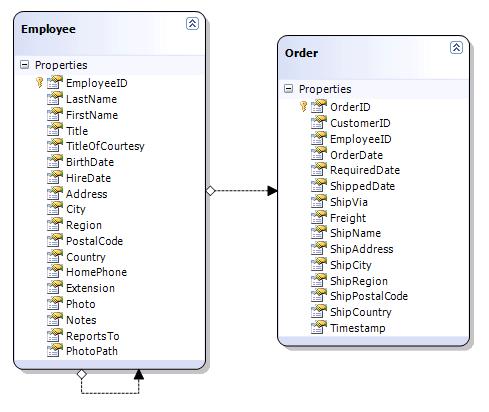

I created a sample project using the Northwind sample database. The first thing to do is to create your .dbml file and then drag tables from the Server Explorer onto the design surface. I have something that looks like this:

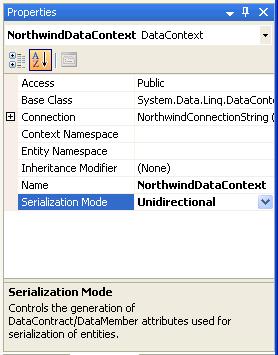

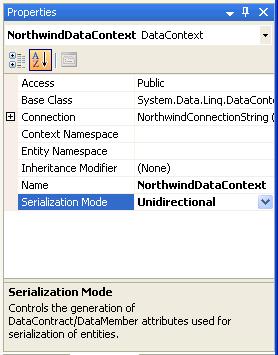

I’m also going to click on blank space in the design surface, go to the Properties window, and set the SerializationMode to Unidirectional. This will put the [DataContract] and [DataMember] attributes on the designer-generated entity objects so that they can be used in WCF services.

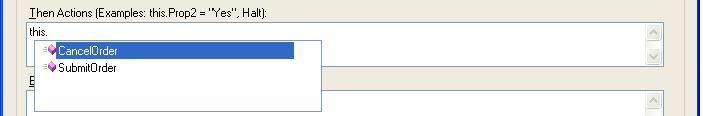

Now I’ll create some web services that look something like this:

public class EmployeeService

{

public Employee GetEmployee(int employeeId)

{

NorthwindDataContext dc = new NorthwindDataContext();

Employee employee = dc.Employees.FirstOrDefault(e => e.EmployeeID == employeeId);

return employee;

}

public void SaveEmployee(Employee employee)

{

// TODO

}

}

I have two web service methods — one that loads an Employee by ID and one that saves an Employee.

Notice that both web service methods are using the entity objects generated by LINQ to SQL. There are some situations when you will not want to use the LINQ to SQL generated entities. For example, if you’re exposing a public web service, you probably don’t want to use the LINQ to SQL entities because that can make it a lot harder for you to refactor your database or your object model without having to change the web service definition, which could break code that calls the web service. In my case, I have a web service where I own both sides of the wire and this service is not exposed publicly, so I don’t have these concerns.

The GetEmployee() method is fairly straight-forward — just load up the object and return it. Let’s look at how we should implement SaveEmployee().

In order for the DataContext to be able to save an object that wasn’t loaded from the same DataContext, you have to let the DataContext know about the object. How you do this depends on whether the object has ever been saved before.

How you make this determination is based on your own convention. Since I’m dealing with integer primary keys with identity insert starting at 1, I can assume that if the primary key value is < 1, this object is new.

Let's create a base class for our entity object called BusinessEntityBase and have that class expose a property called IsNew. This property will return a boolean value based on the primary key value of this object.

namespace Northwind

{

[DataContract]

public abstract class BusinessEntityBase

{

public abstract int Id { get; set; }

public virtual bool IsNew

{

get { return this.Id <= 0; }

}

}

}

Now we have to tell Employee to derive from BusinessEntityBase. We can do this because the entities that LINQ to SQL generates are partial classes that don't derive from any class, so we can define that in our half of the partial class.

namespace Northwind

{

public partial class Employee : BusinessEntityBase

{

public override int Id

{

get { return this.EmployeeID; }

set { this.EmployeeID = value; }

}

}

}

Now we should be able to tell if an Employee object is new or not. I'm also going to do the same thing with the Order class since the Employee object contains a list of Order objects.

namespace Northwind

{

public partial class Order : BusinessEntityBase

{

public override int Id

{

get { return this.OrderID; }

set { this.OrderID = value; }

}

}

}

OK, let's start filling out the SaveEmployee() method.

public void SaveEmployee(Employee employee)

{

NorthwindDataContext dc = new NorthwindDataContext();

if (employee.IsNew)

dc.Employees.InsertOnSubmit(employee);

else

dc.Employees.Attach(employee);

dc.SubmitChanges();

}

Great. So now I can call the GetEmployee() web method to get an employee, change something on the Employee object, and call the SaveEmployee() web method to save it. But when I do it, nothing happens.

The problem is with this line:

public void SaveEmployee(Employee employee)

{

NorthwindDataContext dc = new NorthwindDataContext();

if (employee.IsNew)

dc.Employees.InsertOnSubmit(employee);

else

dc.Employees.Attach(employee); // <-- PROBLEM HERE

dc.SubmitChanges();

}

The Attach() method attaches the entity object to the DataContext so that the DataContext can save it. But the overload that I called just attached the entity to the DataContext and didn't check to see that anything on the object had been changed. That doesn't do us a whole lot of good. Let's try this overload:

public void SaveEmployee(Employee employee)

{

NorthwindDataContext dc = new NorthwindDataContext();

if (employee.IsNew)

dc.Employees.InsertOnSubmit(employee);

else

dc.Employees.Attach(employee, true); // <-- UPDATE

dc.SubmitChanges();

}

This second parameter is going to tell LINQ to SQL that it should treat this entity as modified so that it needs to be saved to the database. Now when I call SaveEmployee(), I get an exception when I call Attach() that says:

An entity can only be attached as modified without original state if it declares a version member or does not have an update check policy.

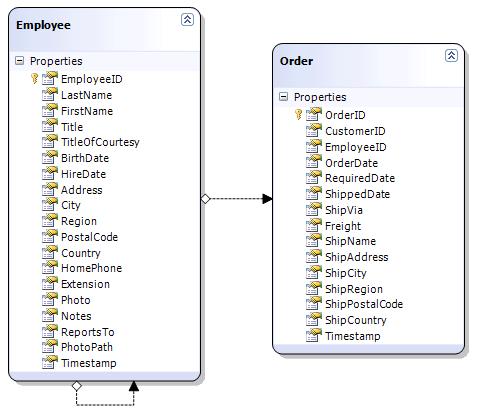

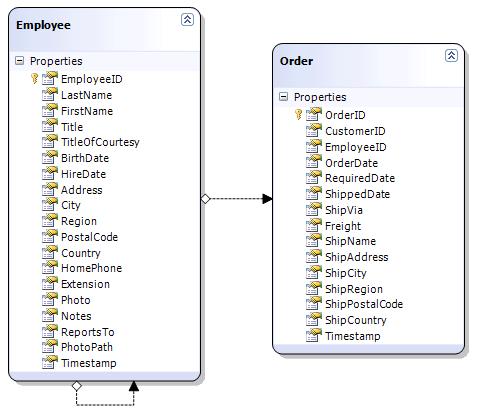

What this means is that my database table does not have a timestamp column on it. Without a timestamp, LINQ to SQL can't do it's optimistic concurrency checking. No big deal, I'll go add timestamp columns to the Employees and Orders tables in the database. I'll also have to go into my DBML file and add the column to the table in there. You can either add a new property to the object by right-clicking on the object in the designer and selecting Add / Property, or you can just delete the object from the designer and then dragging it back on from the Server Explorer.

Now the DBML looks like this:

Now let's try calling SaveEmployee() again. This time it works. Here is the SQL that LINQ to SQL ran:

UPDATE [dbo].[Employees]

SET [LastName] = @p2, [FirstName] = @p3, [Title] = @p4, [TitleOfCourtesy] = @p5, [BirthDate] = @p6, [HireDate] = @p7, [Address] = @p8, [City] = @p9, [Region] = @p10, [PostalCode] = @p11, [Country] = @p12, [HomePhone] = @p13, [Extension] = @p14, [Photo] = @p15, [Notes] = @p16, [ReportsTo] = @p17, [PhotoPath] = @p18

WHERE ([EmployeeID] = @p0) AND ([Timestamp] = @p1)

SELECT [t1].[Timestamp]

FROM [dbo].[Employees] AS [t1]

WHERE ((@@ROWCOUNT) > 0) AND ([t1].[EmployeeID] = @p19)

-- @p0: Input Int (Size = 0; Prec = 0; Scale = 0) [5]

-- @p1: Input Timestamp (Size = 8; Prec = 0; Scale = 0) [SqlBinary(8)]

-- @p2: Input NVarChar (Size = 8; Prec = 0; Scale = 0) [Buchanan]

-- @p3: Input NVarChar (Size = 6; Prec = 0; Scale = 0) [Steven]

-- @p4: Input NVarChar (Size = 13; Prec = 0; Scale = 0) [Sales Manager]

-- @p5: Input NVarChar (Size = 3; Prec = 0; Scale = 0) [Mr.]

-- @p6: Input DateTime (Size = 0; Prec = 0; Scale = 0) [3/4/1955 12:00:00 AM]

-- @p7: Input DateTime (Size = 0; Prec = 0; Scale = 0) [10/17/1993 12:00:00 AM]

-- @p8: Input NVarChar (Size = 15; Prec = 0; Scale = 0) [14 Garrett Hill]

-- @p9: Input NVarChar (Size = 6; Prec = 0; Scale = 0) [London]

-- @p10: Input NVarChar (Size = 0; Prec = 0; Scale = 0) [Null]

-- @p11: Input NVarChar (Size = 7; Prec = 0; Scale = 0) [SW2 8JR]

-- @p12: Input NVarChar (Size = 2; Prec = 0; Scale = 0) [UK]

-- @p13: Input NVarChar (Size = 13; Prec = 0; Scale = 0) [(71) 555-4848]

-- @p14: Input NVarChar (Size = 4; Prec = 0; Scale = 0) [3453]

-- @p15: Input Image (Size = 21626; Prec = 0; Scale = 0) [SqlBinary(21626)]

-- @p16: Input NText (Size = 448; Prec = 0; Scale = 0) [Steven Buchanan graduated from St. Andrews University, Scotland, with a BSC degree in 1976. Upon joining the company as a sales representative in 1992, he spent 6 months in an orientation program at the Seattle office and then returned to his permanent post in London. He was promoted to sales manager in March 1993. Mr. Buchanan has completed the courses "Successful Telemarketing" and "International Sales Management." He is fluent in French.]

-- @p17: Input Int (Size = 0; Prec = 0; Scale = 0) [2]

-- @p18: Input NVarChar (Size = 37; Prec = 0; Scale = 0) [http://accweb/emmployees/buchanan.bmp]

-- @p19: Input Int (Size = 0; Prec = 0; Scale = 0) [5]

Notice that it passed back all of the properties in the SQL statement -- not just the one that I changed (I only changed one property when I made this call). But isn't it horribly ineffecient to save every property when only one property changed?

Well, you don't have much choice here. Now there is another overload of Attach() that takes in the original version of the object instead of the boolean parameter. In other words, it is saying that it will compare your object with the original version of the object and see if any properties are different, and then only update those properties in the SQL statement.

Unfortunately, there's no good way to use this overload in this case, nor do I think you would want to. I suppose you could load up the existing version of the entity from the database and then pass that into Attach() as the "original", but now we're doing even more work -- we're doing a SELECT that selects the entire row, and then we're doing an UPDATE that only updates the changed properties. I would rather stick with the one UPDATE that updates everything.